Inside the Black Box of Large Language Models

When should you run this activity?

I use this activity at the start of a workshop, after an icebreaker such as the AI Term Challenge and before moving on to more advanced activities.

What will they learn?

- LLMs are essentially next-word prediction machines

- They don't "think" or "understand"; they predict patterns

- Understanding this helps them write better prompts

What materials do you need?

- Post-it notes

- Markers

- One die per table

- Optional: the LLM mini board

What do you need to know as a trainer?

Make sure you're comfortable explaining LLMs in simple terms: what they are, what they are not, and how they predict the next word. I have prepared a Trainer's Guide about LLMs to help you.

Step-by-Step Guide

1. Setup

OK, the only way to truly understand an LLM is to become one. Each table will represent a different LLM, such as GPT-3, Claude, Gemini, or Mistral.

- Create teams of 3-4 participants

- Each team is now... an LLM!

- Give each table Post-its, markers, and a die

2. Explain the Process

Walk everyone through the first step together. Have each team:

- Write "The dog" on a starting Post-it

- Brainstorm 3 likely next words and write each on a new Post-it

- Roll the die to select one and circle the chosen word

- Use that word as the input for the next prediction
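
For trainers who like to see the mechanics spelled out, the table game can be sketched as a short Python loop: brainstorm candidates, roll, pick, feed the choice back in. This is a minimal illustration, not how a real LLM is implemented. The word lists in `BRAINSTORM` are hypothetical stand-ins for what participants write on their Post-its, and the die mapping (1-2 picks the first word, 3-4 the second, 5-6 the third) is an assumption, since the activity only says to roll the die to select one.

```python
import random

# Hypothetical brainstormed candidates, keyed by the sentence so far.
# In the workshop, participants write these on Post-its at each step.
BRAINSTORM = {
    "The dog": ["barked", "ran", "slept"],
    "The dog barked": ["at", "loudly", "again"],
    "The dog ran": ["away", "home", "fast"],
    "The dog slept": ["all", "under", "on"],
}


def pick_word(candidates: list[str], roll: int) -> str:
    """Map a six-sided die roll to one of three candidates.

    Assumed mapping: 1-2 -> first word, 3-4 -> second, 5-6 -> third.
    """
    return candidates[(roll - 1) // 2]


def generate(start: str, max_steps: int, rng: random.Random) -> str:
    """Repeat brainstorm -> roll -> pick, feeding each choice back in."""
    sentence = start
    for _ in range(max_steps):
        candidates = BRAINSTORM.get(sentence)
        if candidates is None:  # nothing brainstormed for this context: stop
            break
        roll = rng.randint(1, 6)  # the die roll
        word = pick_word(candidates, roll)
        sentence = f"{sentence} {word}"  # the chosen word becomes part of the next input
    return sentence


print(generate("The dog", max_steps=4, rng=random.Random(7)))
```

Changing the seed passed to `random.Random` is like letting a different team roll: the same candidates can lead to a different sentence.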

3. Generate Text

Now each team is on its own and builds its sentence step by step. Continue for 3-4 iterations, until they have a reasonably complete sentence.

4. Share & Compare

Teams read their final sentences aloud and compare the different paths they took.

5. Debrief

Guide the discussion around these key insights:

- LLMs just predict likely next words

- No real understanding, reasoning, or planning

- Predictions based on patterns in training data

- Role of randomness in outputs

See the Trainer's Guide about LLMs for more detail.
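
To make "predictions based on patterns in training data" concrete, here is a toy next-word predictor in Python. It is a deliberately simplified stand-in: a bigram counter that only looks at the previous word, whereas real LLMs use a neural network over a much longer context. The corpus and the helper names (`train_bigrams`, `next_word`, `generate`) are hypothetical and exist only for this illustration. It still shows the two debrief points: the model only knows which words followed which in its training text, and randomness decides among the likely options.

```python
import random
from collections import Counter, defaultdict
from typing import Optional

# A tiny, made-up "training corpus" (hypothetical, for illustration only).
CORPUS = """
the dog barked at the cat .
the dog ran to the park .
the dog barked loudly .
the cat slept on the sofa .
""".split()


def train_bigrams(tokens: list[str]) -> dict[str, Counter]:
    """Count which word follows which: this table is all the model 'knows'."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def next_word(model: dict[str, Counter], word: str, rng: random.Random) -> Optional[str]:
    """Sample the next word in proportion to how often it followed `word`."""
    counts = model.get(word)
    if not counts:  # word never seen in training: no prediction possible
        return None
    return rng.choices(list(counts), weights=list(counts.values()), k=1)[0]


def generate(model: dict[str, Counter], start: str, max_words: int, rng: random.Random) -> str:
    """Extend `start` one sampled word at a time, stopping at '.' or an unknown word."""
    words = start.split()
    for _ in range(max_words):
        nxt = next_word(model, words[-1], rng)
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(model["dog"])  # Counter({'barked': 2, 'ran': 1})
print(generate(model, "the dog", max_words=6, rng=random.Random(1)))
```

"barked" comes out twice as often as "ran" after "dog" for no reason other than frequency in the corpus; nothing in the code knows what a dog is.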

Facilitation Tips

Make it Fun

- Play up the roleplay aspect

- Keep iterations quick and flowing

- Optionally, have each team pick a cool or funny name for its "AI Assistant"

Keep it hands-on!

It is important that participants actively write the words, roll the die, and circle the chosen word. Doing it themselves gives them a much better feel for how an LLM works than simply hearing it explained.

Variants

Explain Hallucinations

I love this variant!

After they have played the game, show the Hallucination Risk Card and explain that a hallucination is basically what happens when an LLM predicts the wrong next word. While they are still in the shoes of the LLM, ask them to "hallucinate" the next word and watch how the whole sentence turns into nonsense.

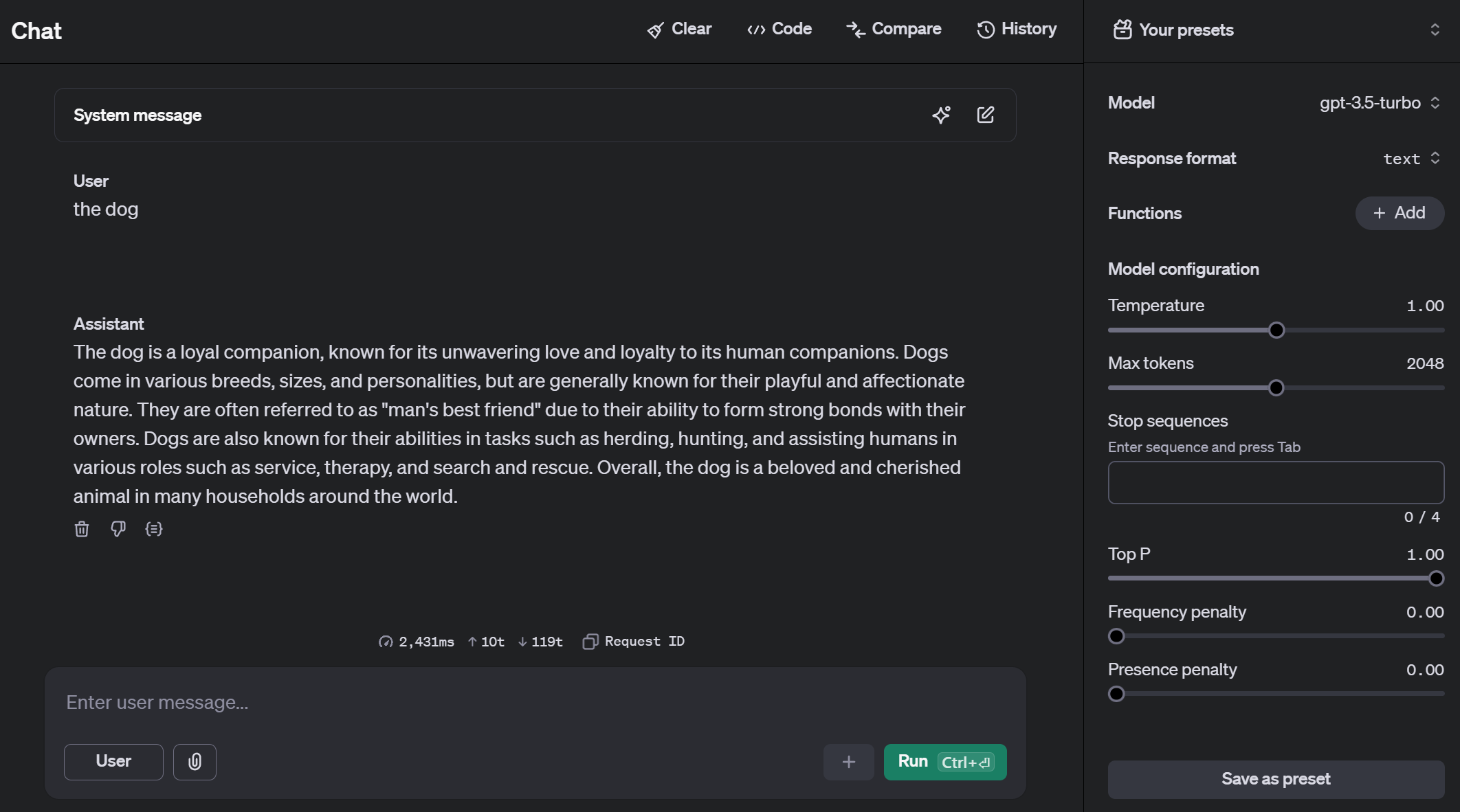

Demonstrate with the OpenAI Playground

You can also run a live demonstration in OpenAI's Playground to show how an LLM works in practice. Make sure to use an older, early-generation model such as GPT-3.5-turbo.

Adjust the temperature to show how it affects the randomness of the output and the risk of hallucinations.
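
If participants ask what the temperature slider actually does, a small sketch can help. Roughly speaking, a model gives a score to every possible next token (often a word or part of a word), turns the scores into probabilities, and samples from them; in standard temperature sampling, the scores are divided by the temperature before that step. The words and scores in `SCORES` are made up, and `softmax_with_temperature` is a hypothetical helper for illustration, not the Playground's or OpenAI's code.

```python
import math

# Hypothetical model scores (logits) for the word after "The dog".
SCORES = {"barked": 2.0, "ran": 1.0, "slept": 0.5, "sang": -1.0}


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; temperature rescales them first."""
    if temperature <= 0:
        raise ValueError("this sketch only handles a positive temperature")
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(SCORES, t)
    print(t, {w: round(p, 2) for w, p in probs.items()})
```

Running it shows the pattern to look for in the Playground: at low temperature almost all the probability goes to "barked", while at higher temperature an unlikely word like "sang" gets a real chance of being picked, which is one way a sentence can drift into nonsense. In the dice game, that is like giving the odd words more faces of the die.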

For Large Groups

- Use a digital tool such as Stormz, Slido, or Mentimeter to collect and display the sentences.

- Word Cloud: Even better, the Starter Kit includes a Stormz template that displays the sentences as a word cloud. You can see which words are most popular, and clicking a word shows the full sentence. It's both entertaining and educational.

For Advanced Groups

- Add a "temperature" discussion (how the size of the die affects randomness)

- Explore how context length affects predictions

- Compare the results with actual LLM outputs

What's Next

I usually follow this activity with a short presentation (the only one I give!) to teach them more about LLMs (context window, training data, etc.).

After understanding how LLMs work, participants can:

- Move to Task Mapping to identify use cases

- Learn prompt engineering fundamentals

- Start experimenting with real AI tools

Resources

- LLM Trainer's Guide for understanding LLMs